Thesis Topics, Student Projects & Open Positions

Thesis Topics

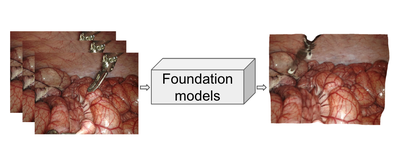

Master Thesis: Adapting Foundation Models for Surgical Outcome Prediction

Foundation models, such as BiomedParse, have demonstrated exceptional generalization capabilities across various tasks using multimodal healthcare data. In this project, we want to fine-tune such foundation models to predict surgical outcomes from limited available data. We also want to investigate techniques for incorporating domain knowledge during fine-tuning, and measure the impact of that domain knowledge on model performance and interpretability. (contact)

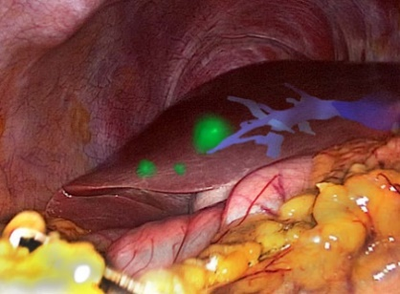

Bachelor Thesis: Rendering Methods for Correct Depth Perception in Augmented Reality Overlays

In prospective navigation systems for minimally invasive surgery, models created from CT and MRI scans are rendered transparently and overlaid on the endoscopic video stream. This can lead to depth-ordering errors, because occlusions, which normally serve as visual cues for depth perception, become ambiguous under transparent rendering. Explore and implement different rendering methods that help users interpret the augmented reality overlay correctly. (contact)

Master Thesis: Hybrid Representation for 3D Surgical Scene Reconstruction

Accurate 3D reconstruction of surgical environments is crucial for robotic-assisted surgery, providing precise spatial awareness of anatomical structures and surgical instruments. Traditional 3D representations—such as explicit meshes, volumetric grids, and neural implicit models—have their own strengths and weaknesses.

This project aims to develop a hybrid 3D reconstruction approach that combines the strengths of different 3D representations to improve reconstruction accuracy, efficiency, and adaptability in surgical settings.

Related skills: Computer vision, 3D reconstruction, PyTorch. (contact)

Master Thesis: 4D Gaussian Splatting for Surgical Scene Reconstruction Using Foundation Models

Accurate 3D reconstruction of surgical environments is crucial for robotic-assisted surgery, providing real-time spatial awareness of anatomical structures and surgical instruments. However, reconstructing deformable soft tissues poses challenges due to dynamic changes, occlusions, and lighting variations.

This project explores 4D Gaussian Splatting, an advanced technique that models dynamic scenes efficiently by representing them as time-aware Gaussian primitives. By leveraging a foundation model, the goal is to enhance reconstruction quality, improve generalization across different surgical scenarios, and enable real-time rendering.

Related skills: Computer vision, 3D reconstruction, PyTorch. (contact)

Master Thesis: Camera Localization for Laparoscopic Surgeries

SLAM (Simultaneous Localization and Mapping) plays a crucial role in robotic-assisted surgery by enhancing 3D scene understanding, precise navigation, and spatial awareness of surgical instruments. However, traditional SLAM methods struggle in surgical environments due to soft tissue deformation, making accurate localization challenging.

This project aims to tackle this problem by utilizing implicit 3D reconstruction for camera localization in surgical scenes. We seek to improve localization accuracy, even in dynamically changing anatomy, leading to safer and more precise minimally invasive procedures.

Related skills: Computer vision, SLAM, neural implicit representations (e.g., NeRF, Gaussian Splatting), PyTorch. (contact)

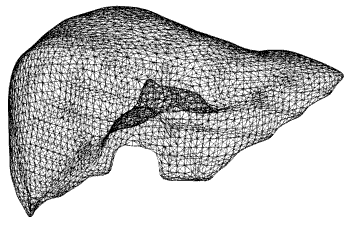

Master Thesis: Remeshing for Soft Tissue Simulation using Reinforcement Learning

In biomechanical simulations, the accuracy of soft tissue deformation highly depends on the quality of the mesh. To ensure high precision, remeshing is required after each simulation step to maintain element quality and improve numerical stability. Traditional remeshing techniques can be computationally expensive and challenging to optimize.

This project aims to explore reinforcement learning (RL) as an adaptive remeshing strategy, where an intelligent agent learns to optimize the mesh dynamically. By integrating RL, we hope to develop an efficient and automated remeshing framework that enhances simulation accuracy while minimizing computational costs.

Related skills: Reinforcement learning, fundamental knowledge about finite element methods (FEM), PyTorch. (contact)

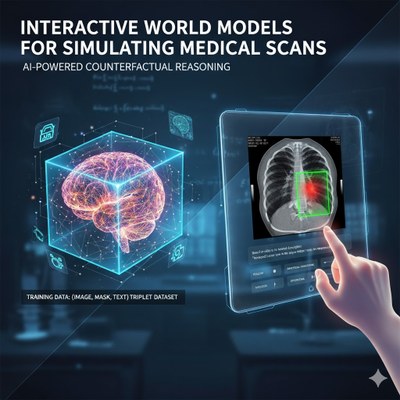

Master Thesis: Interactive World Models for Simulating Medical Scans

Our lab is developing a state-of-the-art generative world model that learns an internal simulation of medical imaging (MRI, CT, pathology). By training on a unique (image, mask, text) triplet dataset and leveraging a powerful pre-trained foundation model, this project focuses on enabling interactive, counterfactual reasoning. The goal is to build a model that can realistically re-render a medical scan and its segmentation mask based on edits to its textual description, allowing researchers to simulate "what-if" scenarios in a visually intuitive way.

Requirements:

- Strong background in deep learning and PyTorch

- Experience with multimodal data (e.g., vision, language, medical imaging)

- Familiarity with distributed training or large-scale model training is a plus

- Self-driven and able to manage a complex research pipeline (contact)

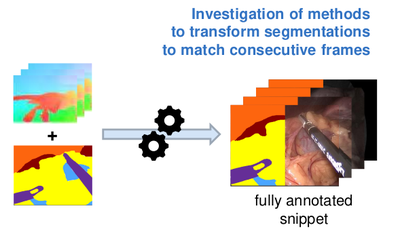

Investigating Tracking and Optical Flow for Scene Segmentation in Videos

Develop and evaluate tracking methods or optical flow techniques to propagate full-scene segmentations from a single video frame onto subsequent frames.

The project aims to generate additional annotations by leveraging temporal consistency in video data, reducing the need for extensive manual labeling.

The topic includes challenges such as handling occlusions, dynamic object movements, and ensuring accurate alignment across frames. This work will contribute to improving annotation efficiency in large-scale video datasets. (contact)
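As a minimal illustration of the propagation idea, and not the method the project prescribes, the sketch below warps a label mask from one frame to the next using a dense flow field. The function name `propagate_mask`, the backward-flow convention, and the `-1` marker for unknown pixels are assumptions made for this example; a real pipeline would obtain the flow from an optical flow estimator and would need explicit occlusion handling.

```python
import numpy as np


def propagate_mask(mask, flow):
    """Warp a label mask from frame t to frame t+1 using backward flow.

    mask: (H, W) signed integer label map for frame t.
    flow: (H, W, 2) backward flow; flow[y, x] = (dx, dy) points from pixel
          (x, y) in frame t+1 to its source location in frame t.
    Pixels whose source falls outside the image get the label -1 (unknown).
    """
    h, w = mask.shape
    ys, xs = np.mgrid[0:h, 0:w]
    # Nearest-neighbour lookup keeps labels discrete (no label blending).
    src_x = np.rint(xs + flow[..., 0]).astype(int)
    src_y = np.rint(ys + flow[..., 1]).astype(int)
    valid = (src_x >= 0) & (src_x < w) & (src_y >= 0) & (src_y < h)
    out = np.full((h, w), -1, dtype=mask.dtype)
    out[valid] = mask[src_y[valid], src_x[valid]]
    return out


# Toy example: a labelled column moves one pixel to the right.
mask = np.zeros((4, 4), dtype=np.int64)
mask[:, 0] = 1
flow = np.zeros((4, 4, 2))
flow[..., 0] = -1.0  # each pixel in frame t+1 came from x - 1 in frame t
print(propagate_mask(mask, flow))
```

Pixels marked `-1` are those for which the previous frame offers no label, for example newly revealed regions; these are the cases where manual annotation or a learned model would still be needed.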

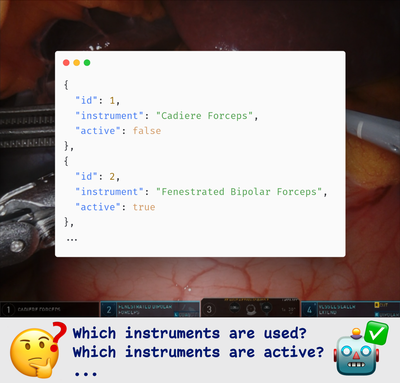

Master Thesis: HUDini: Automated Metadata Extraction from the da Vinci Xi Heads-Up Display

Collections of surgical videos recorded with the da Vinci Xi system include the on-screen heads-up display (HUD), which contains valuable information such as mounted instruments, their activation state, and other intraoperative context. However, this information is currently locked inside the video stream and not available as structured metadata. In this project, you will (1) systematically analyze which types of HUD information can be extracted and assess their clinical relevance and feasibility, and (2) develop a prototype pipeline to automatically infer a selected subset of this information from surgical videos. The resulting metadata will enrich existing datasets and provide a foundation for advanced machine learning applications in surgical data science.

Requirements:

- Good proficiency in Python

- Experience with computer vision (e.g. OpenCV, image/video analysis)

- Familiarity with PyTorch or another deep learning framework

- Interest in surgical data science; no prior medical knowledge required (contact)

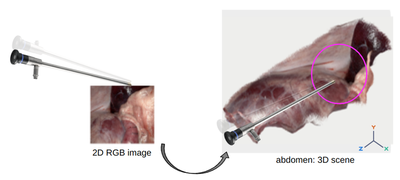

Master Thesis: Learning Precise Manipulation with 3D Imitation Learning

Recent imitation-learning-based approaches equipped with deep learning models have shown remarkable results in learning surgical robotic tasks autonomously. However, these successes rely on multi-camera setups, which are likely not feasible in minimally invasive surgery (MIS) due to space constraints. In this project, you will leverage FoundationStereo to create a 3D point cloud of the MIS scene (link) and use it to render multiple virtual views (link), then train a state-of-the-art imitation learning model (Diffusion Policy, Action Chunking Transformer) to predict keypoints for robot path planning in the needle driving task (shown in the picture).

Required skills:

- Good proficiency in Python

- Previous experience with PyTorch and deep neural networks

- Knowledge of CV concepts like depth estimation, camera calibration, etc. is a plus

- No prior knowledge of imitation learning or robotics is needed (contact)

Master Thesis / Research Project: Imitation Learning with Flow Matching in Surgical Robotics

Building upon recent advancements in generative models, use conditional flow matching for visuomotor imitation learning of surgical sub-tasks like needle driving or pick-and-place. A recent line of work builds on the success of diffusion models in imitation learning. It has been suggested here (link) that the simplicity of flow matching objectives yields more stable training and better generation quality than stochastic denoising diffusion processes. Build an imitation learning algorithm using flow matching, inspired by this work (link), and benchmark its performance against Diffusion Policy and other, simpler frameworks.
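For orientation, one common form of the conditional flow matching objective is sketched below. It assumes a straight-line probability path between a noise sample and an expert action; the linked works may use a different path or parameterization. The symbols are introduced for this sketch only: $x_1$ is an expert action (or action chunk), $o$ the corresponding observation, $x_0$ Gaussian noise, and $v_\theta$ the learned velocity field.

```latex
\begin{aligned}
x_t &= (1 - t)\,x_0 + t\,x_1, \qquad x_0 \sim \mathcal{N}(0, I),\quad t \sim \mathcal{U}[0, 1],\\
\mathcal{L}(\theta) &= \mathbb{E}_{t,\,x_0,\,(x_1,\,o)}\,\bigl\lVert v_\theta(x_t, t, o) - (x_1 - x_0) \bigr\rVert^2 .
\end{aligned}
```

At inference time, an action is generated by drawing a noise sample and integrating the ODE $\mathrm{d}x/\mathrm{d}t = v_\theta(x, t, o)$ from $t = 0$ to $t = 1$. The training target is a plain regression onto $x_1 - x_0$, which is the simplicity the paragraph above refers to.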

Required skills:

- Good proficiency in Python and PyTorch

- Basic mathematical understanding of deep generative modeling and ODEs/SDEs

- Previous experience in imitation/reinforcement learning is a plus.

- No prior knowledge of robotics is required! (contact)

Master Thesis: Recognition of Phase and Action Triplets in Laparoscopic Video and Sensor Data

To take the next steps in automating surgical tasks in laparoscopy, robot assistance systems have to gain a deeper understanding of the surgical procedure. In this project, we aim to solve the problem of detecting and predicting fine-grained surgical actions. Make use of supervised deep learning methods and annotated video/sensor data. Compare different model architectures to investigate the relevance of temporal information for the learning task. (contact)

Positions

- SHK (student assistant): Implementation of small intestine stretching and elongation simulation in SOFA

- Master thesis: OCT data synthesis with diffusion models

- SHK: Software Developer to reengineer Surgical Training Box

How to apply?

Are you interested in our research and would like to do an undergraduate research project or a bachelor, master or diploma thesis? Or are you looking for a PhD position in our department? Our young, highly motivated and creative team is always open to new talent. For further positions and thesis topics, you can always send an unsolicited application.

Please email your application to Mrs. Abdel Bary (ricarda.abdel-bary(at)nct-dresden.de) and include the following in a single PDF file:

- A motivation letter describing why you want to join our group. Also mention whether you are interested in a specific project and how your experience and education relate to it

- A short CV, including an overview of your programming skills

- A recent overview of grades from your studies, including final grades if available

- A copy of your school graduation certificate

- Letters of reference (if any)