Data-driven Surgical Analytics and Robotics

Data-driven surgical analytics and robotics combine machine learning, data analytics, and robotics to improve surgical outcomes and enhance the capabilities of surgical systems. Our research aims to bridge the current gap between robotics, novel sensors, and artificial intelligence in order to provide assistance along the surgical treatment path. To this end, we quantify surgical expertise and make it accessible to machines, with the overall aim of improving patient outcomes. We make full use of our regional advantages: we work closely in an interdisciplinary setting with different partners, in particular surgical experts, and rely on the existing infrastructure, including a fully equipped experimental OR, a simulation room, and imaging platforms.

Our research on data-driven surgical analytics involves the collection and analysis of large amounts of surgical data, such as surgical images, videos, and patient records, which are then used to develop predictive models, identify patterns and trends, and optimize surgical workflows. On the robotics side, the key areas of our research include surgical workflow optimization, autonomous surgery, robotic guidance and assistance, surgical training and simulation, as well as novel human-machine interaction concepts.

Research Topics

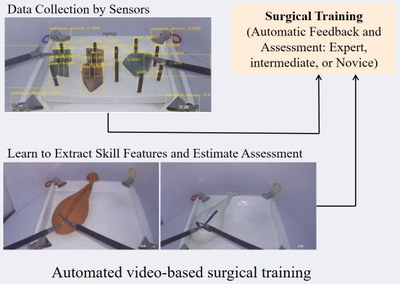

To ensure high-quality patient care and improve patient outcomes, it is crucial to train medical personnel effectively. This holds especially for challenging surgical techniques such as laparoscopic or robot-assisted surgery, and for critical scenarios such as patient resuscitation. For this reason, we investigate how to enhance conventional medical training by means of modern technology and machine learning algorithms. In particular, we use a variety of sensor modalities to perceive the actions of the physician-in-training and novel machine learning algorithms to analyze the collected sensor data. Our focus is the development of smart algorithms that provide automatic, constructive feedback to novice medical personnel along with an automatic, objective assessment of their skill level. The goal is to allow trainees to adjust their performance in real time during training and thereby improve their skills more quickly.

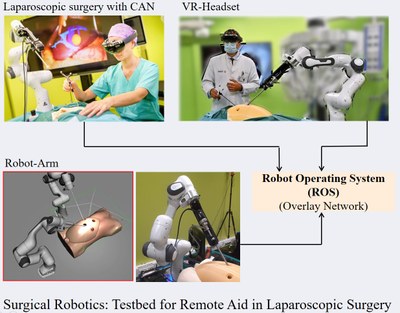

Robot-assisted surgery provides many advantages for surgeons, such as stereo vision, improved instrument control, and a better ergonomic setup. It also offers many potential benefits for patients, including greater precision, less invasive procedures, and a reduced risk of complications. However, using robotic systems and realizing these benefits for both surgeons and patients still poses challenges. Our research into robot-assisted surgery focuses on teleoperation, telestration, and human-machine interaction. Overall, the research tasks of our group in this field include:

- Testbed for remote aid in laparoscopic surgery built on multiple state-of-the-art lightweight industrial robot arms

- Future network technologies to control surgical robots over long distances and intermittent connections

- Development of tactile devices up to the prototype stage to enable fine-grained feedback in laparoscopic surgery

- Novel methods for autonomous camera navigation

- Integration of innovative devices and sensors (data gloves, VR headsets, force sensors) into the surgical workflow

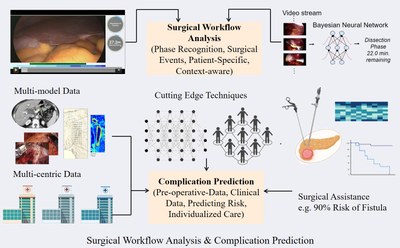

One of the main goals in the field of Computer-assisted Surgery (CAS) is to provide context-aware assistance, i.e., to automatically provide useful information to the surgical team in real time. Building such systems requires an understanding of patient-specific characteristics and of the current state of the surgery, as well as the anticipation of possible future outcomes. With this goal in mind, we develop methods for surgical workflow recognition and anticipation as well as pre-operative data analysis. The deep learning and computer vision methods developed by our group can be applied to video and scene understanding, active learning, weakly- and semi-supervised learning, graph neural networks, federated learning, and multimodal data.

Some of our current projects include:

- Surgical Phase Recognition: Given a video stream of a surgical procedure (laparoscopic/robotic), we aim to continuously recognize and update the current phase of surgery.

- Anticipation of Surgical Events: Given a video stream of a surgical procedure (laparoscopic/robotic), we aim to predict the occurrence of surgical events before they happen. This includes events such as instrument usage, the start of the next surgical phase, or the remaining duration of the procedure. In the future, more complex events such as complications could be targeted. Anticipation is a prerequisite for many applications of assistance systems, such as (semi-)autonomous robotic assistance, instrument preparation, or OR planning.

- Pre-operative Prediction of Complications: Given pre-operative imaging data (e.g. CT, PET, MRI) and clinical data, we aim to predict the risk of certain post-operative complications. Use cases include individualized care through risk-based stratification of patients, surgical planning, or treatment planning.
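To make the online setting of surgical phase recognition more concrete, the following is a minimal sketch, not our actual method. It assumes that some frame-level classifier (not shown) already outputs a probability per phase for each incoming video frame. The phase names, the function `online_phase_stream`, and the smoothing weight `alpha` are hypothetical and chosen only for illustration. The sketch smooths the per-frame probabilities with an exponential moving average that uses past frames only, so the current phase can be updated after every frame without looking into the future.

```python
from typing import Iterable, Iterator, List, Sequence

# Hypothetical phase labels, used only for this illustration.
PHASES = ["preparation", "dissection", "closure"]


def online_phase_stream(
    frame_probs: Iterable[Sequence[float]], alpha: float = 0.3
) -> Iterator[int]:
    """Yield the index of the most likely phase after each incoming frame.

    frame_probs: per-frame phase probabilities from an assumed frame-level
        classifier (one sequence of len(PHASES) values per frame).
    alpha: weight of the newest frame in the exponential moving average
        (an illustrative value, not a tuned parameter).
    """
    smoothed: List[float] = []
    for probs in frame_probs:
        if not smoothed:
            # First frame: nothing to smooth against yet.
            smoothed = list(probs)
        else:
            # Causal update: only the current and past frames are used.
            smoothed = [alpha * p + (1 - alpha) * s for p, s in zip(probs, smoothed)]
        # Report the phase with the highest smoothed probability.
        yield max(range(len(smoothed)), key=smoothed.__getitem__)


if __name__ == "__main__":
    # Toy input: the classifier switches from phase 0 to phase 1 at frame 2.
    frames = [
        [0.9, 0.1, 0.0],
        [0.8, 0.2, 0.0],
        [0.1, 0.9, 0.0],
        [0.1, 0.9, 0.0],
        [0.1, 0.9, 0.0],
    ]
    for t, idx in enumerate(online_phase_stream(frames)):
        print(t, PHASES[idx])
```

In this toy example the reported phase changes one frame after the raw predictions do, which shows the trade-off of causal smoothing: isolated misclassified frames are suppressed at the cost of a short delay at true phase transitions.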

Group Members

- Sebastian Bodenstedt (Postdoc, Group Leader)

- Maxime Fleury (Scientific Software Development)

- Gregor Just (Scientific Software Development)

- Nithya Bhasker (Doctoral Student)

- Isabel Funke (Doctoral Student)

- Dominik Rivoir (Doctoral Student)

- Alexander Jenke (Doctoral Student)

- Ariel Antonio Rodriguez Jimenez (Doctoral Student)

- Stefanie Krell (Doctoral Student)

- Max Kirchner (Doctoral Student)

- Hanna Möllhoff (Doctoral Student)

- Kevin Wang (Doctoral Student)

- Susu Hu (Doctoral Student)

- Martin Lelis (Doctoral Student)

- Stefanie Speidel (Professor, PI)